Digital security experts around the world have their eyes fixed on the Y2Q—“Years to Quantum”—clock. It ticks down the time until the projected date when a quantum computer will be able to break an essential form of modern cryptography. Called public-key cryptography because of its ingenious method of sharing secret codes in public, it keeps your credit card number safe when you shop online and ensures that your phone's software update is coming from the phone company and not a hacker. Whistleblowers use it to contact journalists, and businesses use it to send confidential files.

But a quantum computer would render the standard types of public-key cryptography useless. “This is really very serious,” says Bruno Huttner, co-chair of the Quantum-Safe Security Working Group at the Cloud Security Alliance. “If there was a quantum computer tomorrow, we wouldn't be able to talk together with any kind of security.”

Huttner is one of the creators of the Y2Q clock, named in analogy to the Y2K crisis of the late 1990s. Twentieth-century software programs had denoted years by only two digits, which meant that to computers, 2000 was “00”—the same as 1900. Programs involving such a date were expected to malfunction when the new millennium arrived, causing potentially massive disruptions. But in the end, no banks collapsed, no power grids shut down and no airplanes fell from the sky when the year changed. The transition was seamless—mostly because businesses and governments had raced to fix the Y2K bug.

No one knows exactly when a quantum computer large enough to break cryptographic standards will be developed. The current end date on the Y2Q clock, April 14, 2030, is just a guess. But most researchers believe the shift will happen within the next few decades. “The threat is coming,” Huttner says, and the Y2Q clock is a reminder. “Putting a date on it helps people to focus.”

For governments and other institutions that need to keep secrets for the long term, the real deadline is much sooner. If encrypted data sent today get stored, then a future quantum computer could retroactively decrypt the messages. “If you need to keep a secret for 20 years, and you think that quantum computers that break your cryptography might emerge within 20 years, you have a problem today,” says computer scientist Chris Peikert of the University of Michigan.

Anticipating this threat, the National Institute of Standards and Technology (NIST) initiated a public contest in 2016. It solicited ideas for “post-quantum” or “quantum-resistant” cryptography—codes that can run on today's computers but are so robust that not even quantum computers could break them. Underscoring the urgency, in December 2022 the U.S. Congress passed the Quantum Computer Cybersecurity Preparedness Act, which requires government agencies to create a plan for transitioning to such algorithms.

Four rounds of submissions and appraisals later, NIST selected a winner, called CRYSTALS-Kyber, in the category of public-key encryption and three winners in the category of digital signatures, used for securely identifying the sender of a message. NIST is now working with researchers to standardize the winning algorithms so programmers can start laying the foundations of quantum-proof secret-keeping.

Somewhat worryingly, however, three of the four selected algorithms, including CRYSTALS-Kyber, are based on the mathematics of lattices. Experts are confident that the underlying lattice problems are very hard to solve, but no one can guarantee that a future breakthrough won't crack them open.

One of the earliest known forms of cryptography is a cipher that was used to substitute letters in a piece of writing. In his messages, Julius Caesar replaced each letter with one three positions away in the Roman alphabet. In English, that would mean “a” becomes “d,” “b” becomes “e,” and so on. To decrypt a message from Caesar, you would simply reverse the process, shifting the letters by three alphabetical positions.
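Caesar's scheme is simple enough to sketch in a few lines of Python. The function name `caesar_shift` and the sample message are illustrative choices, not anything from the historical record; the only detail taken from the text is the shift of three.

```python
import string

ALPHABET = string.ascii_lowercase  # the 26 letters of the English alphabet

def caesar_shift(text: str, shift: int) -> str:
    """Shift every letter by `shift` positions, wrapping around at the end."""
    result = []
    for ch in text:
        if ch.lower() in ALPHABET:
            idx = (ALPHABET.index(ch.lower()) + shift) % 26
            new = ALPHABET[idx]
            result.append(new.upper() if ch.isupper() else new)
        else:
            result.append(ch)  # leave spaces and punctuation untouched
    return "".join(result)

ciphertext = caesar_shift("attack at dawn", 3)   # "dwwdfn dw gdzq"
plaintext = caesar_shift(ciphertext, -3)         # shifting back decrypts
```

Encrypting and decrypting use the same function with opposite shifts, which is the symmetry the article returns to later.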

There are endless variations of Caesar's substitution scheme—children passing notes in class could create their own, replacing “a” with a heart, “b” with a star, and so on—but they are easy to break. A teacher who confiscates a child's note might notice that it contains many isolated triangles, representing a one-letter word, and deduce that the triangle stands for “I” or “a.” Code breakers can usually solve more complicated substitution schemes by comparing the frequency of different symbols with those of letters in common English texts.
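The frequency trick can be tried on a shifted message. The sketch below assumes, as a simplification, that the most common letter in the hidden English text is "e"; that holds for the sample sentence chosen here but can fail on short or unusual messages. The helper names are hypothetical.

```python
from collections import Counter

def shift_letters(text: str, shift: int) -> str:
    """Caesar-style shift applied to lowercase letters only."""
    out = []
    for ch in text:
        if "a" <= ch <= "z":
            out.append(chr((ord(ch) - ord("a") + shift) % 26 + ord("a")))
        else:
            out.append(ch)
    return "".join(out)

def guess_shift(ciphertext: str) -> int:
    """Guess the shift by assuming the most frequent symbol stands for 'e'."""
    letters = [ch for ch in ciphertext if "a" <= ch <= "z"]
    most_common_letter, _ = Counter(letters).most_common(1)[0]
    return (ord(most_common_letter) - ord("e")) % 26

original = "meet me here after the evening lecture"
secret = shift_letters(original, 3)
recovered_shift = guess_shift(secret)              # 3 for this message
recovered = shift_letters(secret, -recovered_shift)
```

The code breaker never needed the key: counting symbols was enough to recover it.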

The gold standard in modern cryptography, known as the Advanced Encryption Standard, or AES, dramatically expands on Caesar's approach. It scrambles the message by repeatedly substituting the entries and shuffling them like a deck of playing cards. After enough shuffles and substitutions, it's very difficult to reconstruct the original version.

To decrypt the message, you would have to unscramble it by undoing each shuffle and substitution. With a physical deck of cards, this is nearly impossible—the shuffling order is determined by imperceptibly slight movements. But a computer shuffles the message according to a precise set of instructions—for example, “move the second entry into the fifth spot”—that are easy to undo. The computer simply follows the instructions in reverse: “move the fifth entry into the second spot.”
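The idea of a shuffle that is easy to undo can be illustrated with a fixed permutation. This is only a toy under stated assumptions: the particular ordering in `perm` is made up, and real AES combines several kinds of steps that are not shown here.

```python
def apply_permutation(entries, perm):
    """Move the entry at position i to position perm[i]."""
    out = [None] * len(entries)
    for i, dest in enumerate(perm):
        out[dest] = entries[i]
    return out

def invert_permutation(perm):
    """Build the instructions that send every entry back where it came from."""
    inverse = [0] * len(perm)
    for i, dest in enumerate(perm):
        inverse[dest] = i
    return inverse

message = list("SECRET")
perm = [4, 0, 5, 2, 1, 3]   # hypothetical shuffle instructions

scrambled = apply_permutation(message, perm)
restored = apply_permutation(scrambled, invert_permutation(perm))
```

Here `scrambled` spells "EERTSC", and following the inverted instructions returns "SECRET" exactly.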

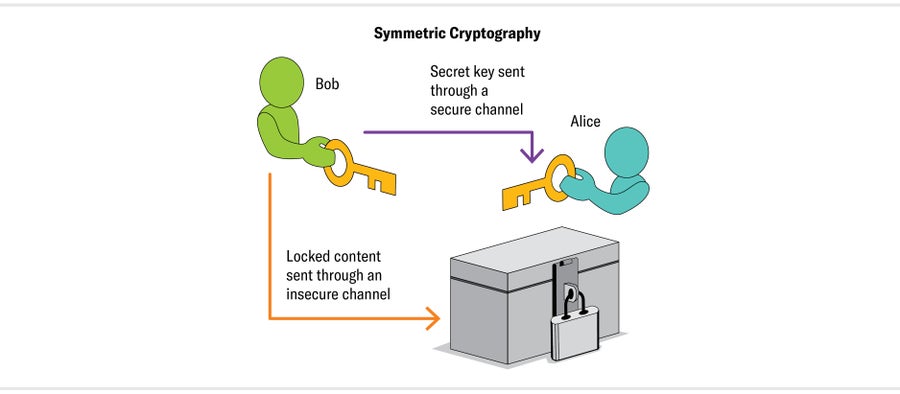

Like Caesar's cipher, AES has symmetrical procedures for encrypting and decrypting. It applies the same process forward and backward, just like twisting a key in opposite directions to lock and unlock a door. Until the 1970s, so-called symmetric cryptography (also known as symmetric-key cryptography) was the only type of cryptography. But it has a major limitation: the sender and receiver need to agree on the procedure for encryption and decryption before exchanging any messages, either in person or through a trusted, separate mode of communication.

Credit: Jen Christiansen

It's hard to imagine an alternative to symmetric cryptography without this constraint. In 1974, when University of California, Berkeley, undergraduate student Ralph Merkle proposed a class project investigating methods for “two people to communicate securely without having made any prior arrangements,” he anticipated how outrageous the idea might seem and added, “No, I am not joking.” Merkle envisioned a system in which two people exchange messages entirely in the open, always assuming someone is listening. Through this public correspondence, they manage to establish a scheme for coding and decoding and use it to send secret messages. If someone else were reading the messages, they wouldn't be able to figure out the scheme. Merkle's proposal was rejected by an expert for having “unrealistic working assumptions.”

Remarkably, however, several mathematics papers realized Merkle's vision just a few years later. Two of the proposed algorithms, called Diffie-Hellman and RSA (short for Rivest-Shamir-Adleman, the surnames of its creators), are ubiquitous in modern communications. As it turns out, even before Merkle's class project, researchers at a British intelligence organization had discovered such coding—public-key cryptography—and kept it a secret.

When you check your bank account online, your computer and the bank's server are exchanging messages: you send your password, and the bank sends your balance. While that information moves through the Internet, someone else could read it. The messages need to be encrypted.

Most messages are encrypted with symmetric cryptography, such as AES, which quickly and efficiently scrambles them. But first your computer and the communicating server need to agree on the specific scrambling procedure. They can't simply write it down, because all their communications could be captured by an eavesdropper. They need to use public-key cryptography.

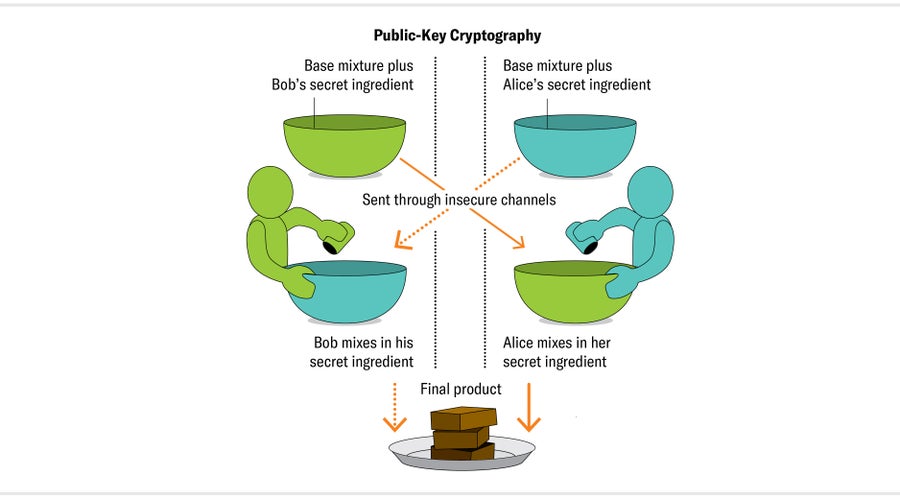

To understand how this process works, let's imagine that two friends, Alice and Bob, own a bakery with a top-secret brownie recipe. It's so secret that even Alice and Bob don't know the full recipe. They each add a special ingredient known only to the person who adds it. To create the brownies, Alice and Bob trade off days starting the recipe. Sometimes Alice mixes up basic ingredients with her secret one and sends the batter to Bob, who adds his secret ingredient and bakes it. Other times Bob first combines the basic ingredients with his secret one before shipping it to Alice, who mixes in her secret ingredient and bakes the brownies.

Credit: Jen Christiansen

Alice and Bob always end up with the same brownies—but they never share the full, exact ingredients with anyone, including each other. Even their conniving delivery driver, Eve, can't figure out the recipe. (In cryptography, the two people exchanging secrets are traditionally named Alice and Bob, and a potential spy is often named Eve.) Eve can't deduce the secret ingredients, because whenever she transports them, they're mixed in with all the basic ingredients—it is impossible to separate them.

Of course, computers don't bake brownies. They work with numbers and math. In real public-key cryptography, the goal is to end up with a shared secret number—something like a temporary password that grants access to a private conversation. The two computers can then continue to have an encrypted conversation using symmetric cryptography such as AES.
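The brownie exchange maps loosely onto a Diffie-Hellman key exchange, and a toy version fits in a few lines. The numbers below (a prime of 23, a base of 5 and the two private values) are tiny illustrative assumptions; real systems use numbers hundreds of digits long.

```python
# Public "basic ingredients": anyone, including Eve, may see these.
prime = 23
generator = 5

# Private "secret ingredients": never sent over the network.
alice_secret = 4
bob_secret = 3

# Each side mixes its secret with the public values and sends the result.
alice_public = pow(generator, alice_secret, prime)   # 5**4 mod 23 = 4
bob_public = pow(generator, bob_secret, prime)       # 5**3 mod 23 = 10

# Each side mixes its own secret into what it received.
alice_shared = pow(bob_public, alice_secret, prime)
bob_shared = pow(alice_public, bob_secret, prime)
```

Both sides arrive at the same shared number, 18, even though neither private value ever traveled between them. That number can then seed a symmetric cipher.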

Different types of public-key cryptography create and share the temporary password in different ways. Alice and Bob hid their brownie recipe from Eve by mixing the ingredients before transporting them. Someone implementing public-key cryptography would instead use mathematical functions to blend secret numbers.

Functions are like machines that take in numbers, churn them up and spit out a new number. The functions used in public-key cryptography are very particular. They need to mix up numbers easily while making them very hard to unmix. Even if Eve sees the output of the function, she shouldn't be able to guess which secret numbers went in as input.

RSA cryptography, for example, is based on the multiplication function and its opposite, factoring. Mixing numbers by multiplying them is relatively easy for a computer even if the numbers are very large. But undoing multiplication, or factoring, is very hard if the numbers are large. (Factoring means answering the question, What numbers do I have to multiply together to get this number? For example, factoring 21 yields three and seven.) Decrypting a password created with RSA would require factoring a large number. The best methods involve filtering through many numbers to find a particular combination of them—which takes a computer a very long time.
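A toy RSA round trip shows the asymmetry between multiplying and factoring. Everything here is a sketch under stated assumptions: the primes 61 and 53, the public exponent 17 and the message 65 are small textbook-style values, and the trial-division attack only works because the numbers are tiny. The modular inverse via `pow(e, -1, phi)` needs Python 3.8 or later.

```python
def factor_semiprime(n: int):
    """Recover two factors of n by trial division (feasible only for small n)."""
    candidate = 2
    while candidate * candidate <= n:
        if n % candidate == 0:
            return candidate, n // candidate
        candidate += 1
    return None

# Key generation: multiplying the primes is the easy direction.
p, q = 61, 53
n = p * q                      # 3233, published as part of the public key
phi = (p - 1) * (q - 1)        # 3120, kept private
e = 17                         # public exponent
d = pow(e, -1, phi)            # private exponent, 2753

message = 65
ciphertext = pow(message, e, n)      # anyone can encrypt with (e, n)
decrypted = pow(ciphertext, d, n)    # only the holder of d can decrypt

# An attacker who can factor n can rebuild phi and therefore d.
recovered_factors = factor_semiprime(n)   # (53, 61)
```

With a 3,233-sized modulus the attack is instant. The security of real RSA rests on the loop in `factor_semiprime`, and every better-known classical method, taking impractically long when `n` has hundreds of digits.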

“Rather than trying to make cryptographic schemes more and more complicated,” says computer scientist Boaz Barak of Harvard University, “we have actually moved to cryptography that is based on very, very simple things like integer factoring, which has been studied for thousands of years.”

In 1994 applied mathematician Peter Shor, then a research scientist at Bell Labs, discovered a way in which a quantum computer could break any code encrypted with RSA or Diffie-Hellman. As Shor told me, he had attended a talk about using quantum computers to solve math problems with a periodic, or repeating, structure. It reminded him of the “discrete logarithm” problem. A logarithmic function is the inverse of an exponential function: computing one means, for example, finding x in the equation $2^x = 16$ (here x = 4).

Usually it's easy to find the logarithm, but the discrete logarithm problem is about computing the logarithm using alternative forms of arithmetic in which one counts in a circle, like on a clock. Just as RSA is based on factoring, Diffie-Hellman is based on the discrete logarithm problem. Computer scientists generally believe that there is no quick way to find the discrete logarithm with a classical computer. But Shor found a way to do it on a quantum computer. He then applied similar logic to show how to use a quantum computer to quickly factor large numbers. Together these solutions are known as Shor's algorithm.
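Both problems can be tried by brute force on small numbers. The sketch below is classical code, not Shor's algorithm: it finds a discrete logarithm by stepping around the "clock" one tick at a time, and it shows how knowing the repeating period of a number's powers reveals the factors of 21. The function names and sample values are illustrative assumptions; a quantum computer's advantage would lie in finding the period quickly for enormous numbers.

```python
from math import gcd

def discrete_log(base: int, target: int, modulus: int):
    """Find x with base**x % modulus == target by trying every x in turn."""
    value = 1
    for x in range(modulus):
        if value == target % modulus:
            return x
        value = (value * base) % modulus
    return None

def multiplicative_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1: the period of the powers of a."""
    if gcd(a, n) != 1:
        raise ValueError("a and n must share no common factor")
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factors_from_period(a: int, n: int):
    """Turn an even period into two factors of n, when the trick applies."""
    r = multiplicative_order(a, n)
    if r % 2 == 1:
        return None
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None
    return gcd(half - 1, n), gcd(half + 1, n)

x = discrete_log(5, 8, 23)            # 5**6 % 23 == 8, so x is 6
period = multiplicative_order(2, 21)  # powers of 2 repeat every 6 steps
factors = factors_from_period(2, 21)  # (7, 3)
```

The loops here take a number of steps that grows with the size of the modulus, which is why the same approach is hopeless against cryptographic-size numbers on a classical machine.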

Shor wasn't imagining programming a real quantum computer—he was simply doing math on chalkboards and paper. “At the time quantum computers seemed like they were way, way far in the future,” Shor says. “So mainly I was thinking that it was a very nice mathematical theorem.” But his algorithm has major implications for public-key cryptography. A quantum computer could use it to break almost all cryptographic systems currently in use.

Classical computers run on long strings of 0s and 1s known as bits, but quantum computers use qubits, a portmanteau of “quantum” and “bit.” Qubits can be in a superposition—strange combinations of 0s and 1s. By hovering between the two states, qubits enable quantum computers to perform certain tasks much faster than classical computers. But quantum computers are finicky. The qubits need to maintain a superposition while the algorithm is running, but they tend to “collapse” into a string of 0s and 1s.

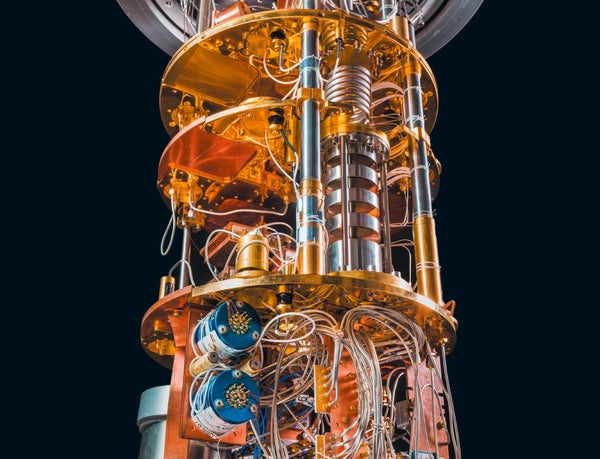

Quantum computers look impressive—they dangle from the ceiling like massive gold chandeliers—but they're still not very powerful. Scientists have been able to run computations with only a modest number of qubits, and the largest number they have factored on a quantum computer using Shor's algorithm is 21. In 2012 researchers at the University of Bristol in England used a quantum computer to deduce that 21 is three times seven.

Many experts believe that a quantum computer large enough to break RSA and Diffie-Hellman will be built within the next few decades, but they're quick to admit that the time line is uncertain. For cryptographers, who need to race ahead of quantum computers, the uncertainty is concerning. “Each industry has a certain aspect of their work that makes it very significant for them,” says Ray Harishankar of IBM. Health-care companies need to secure the data they use in medical research, and power companies must protect the electric grid from hackers. “Worst-case scenario: if something bad happens, they're totally exposed,” Harishankar says.

Every type of public-key cryptography is grounded in a hard math problem. To secure secrets against a quantum future, researchers need to use problems so hard that even a quantum computer cannot solve them in a reasonable amount of time. The NIST challenge sought nominations for public-key cryptographic algorithms that could be widely implemented on standard computers as alternatives to RSA and Diffie-Hellman. The many different connected systems and devices that people use must all “talk this new type of cryptography with one another,” says Lily Chen, a mathematician at NIST.

Before the deadline in 2017, researchers submitted 82 different proposals for post-quantum cryptography. (Confusingly, “quantum cryptography” refers to something else—using quantum phenomena as part of the security scheme.) Over the next year researchers tested the algorithms, and then NIST experts selected 26 algorithms that would continue to the next round.

Public input is an essential part of the NIST contest. Cryptographic systems aren't guaranteed to be secure, so researchers try to poke holes in them. One of the candidate algorithms used “isogeny-based” cryptography, which had been studied for a decade and seemed promising. But two researchers noticed that a 25-year-old mathematics theorem could be used to crack that algorithm. It took them just one hour using a standard laptop.

NIST experts eventually settled on several finalist algorithms, most of which were based on lattice mathematics. “Lattices were a natural choice,” says Vadim Lyubashevsky of IBM, one of the authors of CRYSTALS-Kyber. “People have been working on this in various guises for more than two decades already.”

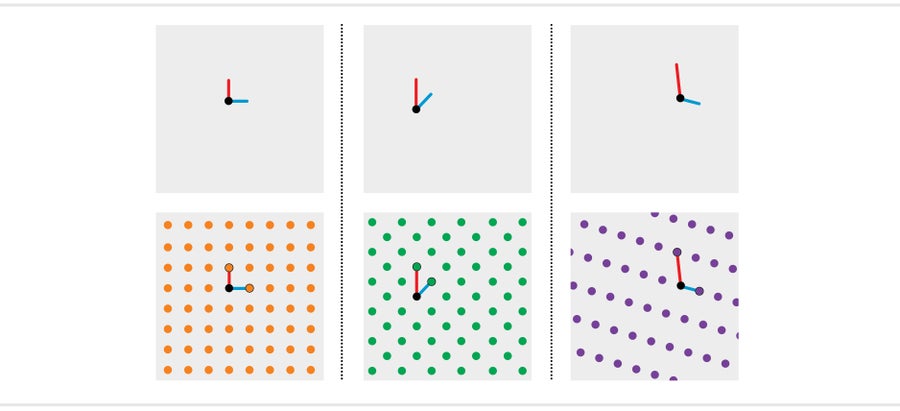

A lattice is a repeating array of dots. One of the simplest looks like a pegboard: dots arranged in a square grid. Mathematicians think of this lattice as being constructed from two foundational lines: a vertical one and a horizontal one of equal length. Imagine drawing a point in the center of a piece of paper, drawing a series of vertical or horizontal lines, all of equal length, extending out from that center and marking the points where the chains of lines end. If you repeat these steps for all possible chains, the dots will form a square grid. Different sets of initial lines create different lattices. The two lines may have unequal lengths, or instead of being perfectly horizontal or vertical, they could be tilted at an angle. Drawing chains of such lines would still yield a repetitive pattern of dots but with the rows and columns offset or of different heights.
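The construction can be sketched by treating each foundational line as a pair of coordinates and chaining whole-number multiples of them. The function name `lattice_points`, the `reach` cutoff and the sample lines are assumptions made for illustration; a true lattice extends forever.

```python
def lattice_points(b1, b2, reach: int):
    """All points i*b1 + j*b2 with whole numbers i, j between -reach and reach."""
    points = []
    for i in range(-reach, reach + 1):
        for j in range(-reach, reach + 1):
            points.append((i * b1[0] + j * b2[0], i * b1[1] + j * b2[1]))
    return points

# Equal horizontal and vertical lines give the pegboard grid.
square = lattice_points((1, 0), (0, 1), reach=2)

# Unequal, tilted lines give an offset pattern of dots.
skewed = lattice_points((2, 0), (1, 3), reach=2)
```

In the skewed lattice the dot (3, 3) appears, reached by one step along each line, while (1, 0) does not belong to the lattice at all.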

Credit: Jen Christiansen

Lattices are the backdrop for some deceptively tricky math problems. Suppose I draw two lines on a piece of paper and tell you that they are the building blocks of a lattice. Then I draw a single dot somewhere on the page. Could you find the lattice point that is closest to that dot?

Credit: Jen Christiansen

You could probably use the foundational lines to start drawing the lattice points and eventually find the closest one. But that would be possible only because the lattice on the paper is two-dimensional. Our visual imaginations are generally limited to three dimensions, but mathematicians can describe lattices with hundreds of dimensions. In those it is very difficult to find the nearest lattice point.
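In low dimensions the search can simply try every nearby combination of the foundational lines. The sketch below does exactly that; the names and the search window `reach` are hypothetical, and the approach is shown only to make the cost visible, since the number of candidates is `(2 * reach + 1) ** dimensions`.

```python
from itertools import product
from math import dist, inf

def closest_lattice_point(basis, target, reach: int):
    """Try every whole-number combination of the basis within the window."""
    best, best_distance = None, inf
    for coeffs in product(range(-reach, reach + 1), repeat=len(basis)):
        point = tuple(
            sum(c * vec[k] for c, vec in zip(coeffs, basis))
            for k in range(len(target))
        )
        distance = dist(point, target)
        if distance < best_distance:
            best, best_distance = point, distance
    return best

basis = [(2, 0), (1, 3)]
nearest = closest_lattice_point(basis, (4.3, 2.6), reach=3)   # (5, 3)

candidates_2d = (2 * 3 + 1) ** 2         # 49 combinations to check
candidates_100d = (2 * 3 + 1) ** 100     # far too many to ever enumerate
```

Two dimensions need 49 checks with this window. A hundred dimensions would need a number of checks with 85 digits, which is why exhaustive search stops being an option.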

Researchers use such massive lattices to build cryptographic systems. For example, start with a 1,000-dimensional lattice. Out of this sea of dots, select a single point. The precise location of this point represents the secret message. Then wiggle a little bit away from this point, floating off the lattice into the ambient space. You can share the new location publicly without giving away the location of the secret point, because finding nearby lattice points is a very hard math problem. Just as mixing the ingredients protects the brownie recipe, wiggling away from the secret point obscures its precise location.
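A two-dimensional toy hints at how the wiggle can hide a point from outsiders yet remain reversible for the intended receiver. This sketch rests on an assumption that goes beyond the text: the receiver privately holds short, nearly perpendicular foundational lines for the lattice, while the public sees only long, nearly parallel lines describing the same dots. It uses a simple rounding rule and is not how CRYSTALS-Kyber works; all names and numbers are hypothetical.

```python
def round_to_lattice(basis, target):
    """Express target in the basis, round the coordinates, map back."""
    (a, b), (c, d) = basis               # basis vectors (a, b) and (c, d)
    det = a * d - b * c
    x = (target[0] * d - target[1] * c) / det
    y = (a * target[1] - b * target[0]) / det
    i, j = round(x), round(y)
    return (i * a + j * c, i * b + j * d)

good_basis = [(4, 1), (1, 4)]        # short and nearly perpendicular (private)
bad_basis = [(14, 11), (19, 16)]     # same lattice, long and nearly parallel (public)

secret_point = (7, -2)               # equals 2*(4, 1) - 1*(1, 4)
public_point = (7.8, -2.9)           # the secret point plus a small wiggle

with_good = round_to_lattice(good_basis, public_point)   # (7, -2)
with_bad = round_to_lattice(bad_basis, public_point)     # (16, 4)
```

Rounding with the good lines lands back on the secret point. The same rule with the bad lines lands on a lattice point far away, and in hundreds of dimensions no known shortcut repairs that failure.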

Computer scientists have been studying such problems for decades and are reasonably confident that they're very hard to solve. But when designing a new algorithm, cryptographers need to balance security with many other concerns, such as the amount of information two computers need to exchange and the difficulty of the computation required to encrypt and decrypt messages. In this respect, lattice-based cryptography excels. “Lattices fit into this sweet spot where everything is reasonable—nothing is too bad, nothing is too good,” Peikert says. “It's sort of like Goldilocks.”

The problem is, no one can guarantee that lattice-based cryptography will always be secure. To guard against a mathematical breakthrough solving the underlying problem—and breaking the code—cryptographers need access to a variety of algorithm types. But three of the four finalists in the NIST process were lattice-based algorithms. The only one selected for standardization in the general public-key encryption category was CRYSTALS-Kyber.

To protect their fragile quantum behavior, quantum computers must be isolated from their environments and supercooled. The hanging apparatus enables the computer, which is encased in a compartment at the bottom, to be cooled. Credit: Christopher Payne/Esto

The NIST contest also has a category for digital-signature algorithms, which provide guarantees about who sent the message and that it wasn't modified. Encryption algorithms answer the question, “Do I know that no one else will be reading this?” explains cryptographer Britta Hale of the Naval Postgraduate School in Monterey, Calif., whereas digital signatures answer the question, “Can I trust these data have not been modified?” The digital-signature algorithms currently in use are also vulnerable to Shor's algorithm. NIST chose to standardize three digital-signature algorithms, two of which are lattice-based.

Such heavy reliance on a single type of math problem is risky. No one can be certain that mathematicians won't eventually crack it. Nor does it give users any flexibility—it could turn out that another type of cryptography fits their specific needs better. For these reasons, NIST has extended the standardization process in both categories to study algorithms that are not lattice-based. “Our goal in this is not to depend on any one mathematical family for the algorithms we select,” explains Dustin Moody, a mathematician at NIST.

Even the algorithms already selected for standardization needed to be tweaked along the way. After the first round of submissions, researchers noticed that CRYSTALS-Kyber had a small issue, which the authors addressed. And during a later round of the contest, they found a way to slightly improve the algorithm. “We changed the parameters to get a few more bits of security,” says Peter Schwabe of the Max Planck Institute for Security and Privacy in Bochum, Germany, who is one of the creators of CRYSTALS-Kyber.

NIST is now in the process of setting the standards, which describe in step-by-step detail how computer programmers should implement the algorithms. “Everything on the Internet must have superspecific, superboring standards with every little detail. Otherwise computers just can't talk to one another,” Lyubashevsky says. After the standards are set, every computer system needs to switch to post-quantum cryptography. There is no one moment when everyone flips a switch. Individual software companies need to upgrade their protocols, governments need to change their requirements, and physical hardware devices need to be swapped out.

It will probably take many years, if not decades, to fully transition to post-quantum cryptography. Until that happens, any messages sent with the old forms of cryptography will be potentially readable with a future quantum computer. Depending on how long you're hoping to keep a secret, the time for concern might already have passed. As Hale says, “On the cryptographic side, everyone is looking at their watches, saying, ‘You're past due.’”