Artificial intelligence (AI) has the potential to play a pivotal role in many areas of medicine. In particular, the use of deep learning to analyse medical images and improve the accuracy of disease diagnosis is a rapidly growing area of interest. But AI is not perfect. A new study has revealed that radiograph labels can confuse AI networks and limit their clinical utility.

The problem arises from a phenomenon called hidden stratification, in which convolutional neural networks (CNNs) trained to analyse medical images learn to classify an image based on diagnostically irrelevant features.

For example, a neural network trained to diagnose malignant skin lesions was discovered to actually be looking for the presence of a ruler, included for scale in images of cancerous lesions. Elsewhere, CNNs trained to detect pneumothorax (collapsed lung) on chest radiographs used the presence of a chest tube as a shortcut to identify such events, resulting in missed diagnoses if no tube was present. Other confounding features include arrows on the image or radiograph labels – commonly used to identify the radiographer or distinguish right from left on an X-ray image.

In this latest study, Paul Yi, director of the University of Maryland Medical Intelligent Imaging (UM2ii) Center, and collaborators assessed how radiograph labels impact CNN training, using images from Stanford’s MURA dataset of musculoskeletal radiographs. They hypothesized that covering up such labels could help direct the CNN’s attention towards relevant anatomic features.

The researchers used 40,561 upper-extremity radiographs to train three DenseNet-121 CNN classifiers to differentiate normal from abnormal images. They assessed three types of input data: original images containing both anatomy and labels; images with the labels covered by a black box; and the extracted labels alone.

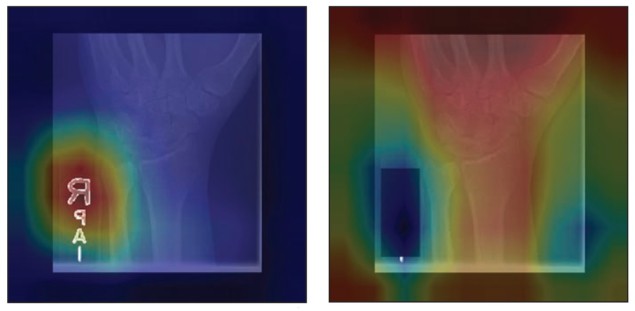
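The three input types described above can be illustrated with a minimal Python sketch. It assumes the label's bounding box is already known; the article does not say how the researchers located the labels, and the function name `split_label`, the box format and the toy pixel values are hypothetical, chosen only for illustration.

```python
from typing import List, Tuple

Image = List[List[int]]          # grayscale pixels, row-major
Box = Tuple[int, int, int, int]  # (top, left, bottom, right); bottom/right exclusive


def split_label(image: Image, box: Box) -> Tuple[Image, Image]:
    """Return (image with the label blacked out, image showing only the label).

    The box coordinates are an assumed input, not something the study specifies.
    """
    top, left, bottom, right = box
    covered = [row[:] for row in image]              # copy so the original is untouched
    label_only = [[0] * len(row) for row in image]   # all-black canvas of the same size
    for r in range(top, bottom):
        for c in range(left, right):
            label_only[r][c] = image[r][c]  # keep only the label pixels
            covered[r][c] = 0               # black box over the label
    return covered, label_only


# Toy 3x4 "radiograph" with a bright label in the lower-right corner.
radiograph = [
    [10, 20, 30, 40],
    [50, 60, 255, 255],
    [70, 80, 255, 255],
]
covered, label_only = split_label(radiograph, (1, 2, 3, 4))
```

Here `radiograph`, `covered` and `label_only` stand in for the original, label-covered and label-only inputs respectively.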

For each CNN, a board-certified musculoskeletal radiologist inspected heatmaps of 500 test images to identify which features the CNNs emphasized. The researchers found that CNNs trained on the original images focused on the radiograph labels in 89% of the 500 heatmaps. When the labels were covered, in 91% of the heatmaps, the CNNs shifted the emphasis back towards anatomic features such as bones.

The team also assessed the area under the curve (AUC), a measure of how well a classifier separates abnormal from normal images, for the three training regimes. For CNNs trained on original images, the AUC was 0.844; this increased to 0.857 for images with covered labels. A CNN trained on radiograph labels alone diagnosed abnormalities with an AUC of 0.638, better than the 0.5 expected from chance. This indicates the presence of hidden stratification, with some labels more associated with abnormalities than others.
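To make the AUC figures concrete, here is a minimal sketch of how such a score can be computed, assuming that AUC here means the area under the receiver operating characteristic curve, as is usual for binary classifiers. It uses the pairwise-ranking formulation: the fraction of (abnormal, normal) pairs in which the abnormal image receives the higher score. The function name `roc_auc` and the toy scores are illustrative and are not taken from the study.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC as the probability that a random abnormal case (label 1)
    outscores a random normal case (label 0); ties count as half."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one abnormal and one normal example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy example: 1 = abnormal, 0 = normal; scores are made-up model outputs.
labels = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.4, 0.2]
auc = roc_auc(labels, scores)  # 8 of the 9 abnormal/normal pairs are ranked correctly
```

A model that gives every image the same score gets 0.5 under this definition, which is why an AUC of 0.638 from labels alone signals that the labels carry some diagnostic information.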


The researchers conclude that CNNs are susceptible to confounding image features and should be screened for this limitation prior to clinical deployment. “Because these labels are ubiquitous in radiographs, radiologists developing CNNs should recognize and pre-emptively address this pitfall,” they write. “Covering the labels represents one possible solution. In our study, this resulted in significantly improved model performance and orientation of attention towards the bones.”

“We are now actively working to better understand hidden stratification, both in identifying potential confounding factors for deep-learning algorithms in radiology and in developing methods to mitigate these confounders,” Yi tells Physics World. “The implications of these issues are huge for ensuring the safe and trustworthy implementation of AI and this initial study has only scratched the surface.”

- The researchers publish their findings in the American Journal of Roentgenology.